The Desperate Hunt for the A.I. Boom’s Most Indispensable Prize

For the past year, Jean Paoli, chief executive of the artificial intelligence start-up Docugami, has been scrounging for what has become the hottest commodity in tech: computer chips.

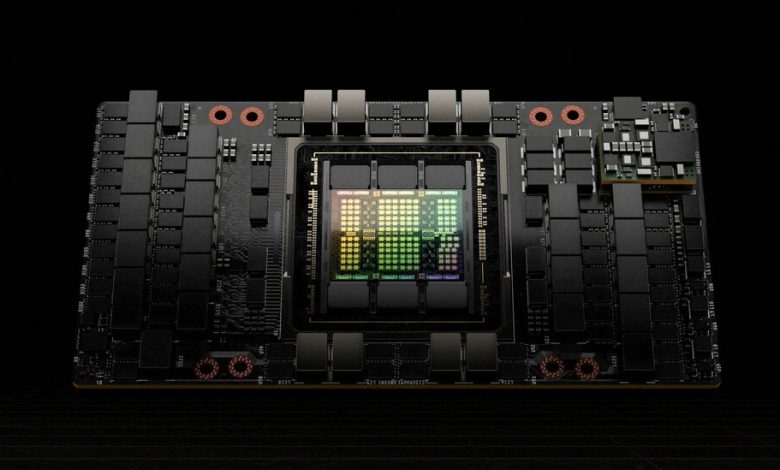

In particular, Mr. Paoli needs a type of chip known as a graphics processing unit, or GPU, because it is the fastest and most efficient way to run the calculations that allow cutting-edge A.I. companies to analyze enormous amounts of data.

So he’s called everyone he knows in the industry who can help. He’s applied for a government grant that allows access to the chips. He’s tried making Docugami’s A.I. technology more efficient so it requires fewer GPUs. Two of his scientists have even repurposed old video gaming chips to help.

“I think about it as a rare earth metal at this point,” Mr. Paoli said of the chips.

More than money, engineering talent, hype or even profits, tech companies this year are desperate for GPUs. The hunt for the essential component was kicked off last year when online chatbots like ChatGPT set off a wave of excitement over A.I., leading the entire tech industry to pile on and creating a shortage of the chips. In response, start-ups and their investors are now going to great lengths to get their hands on the tiny bits of silicon and the crucial “compute power” they provide.

The dearth of A.I. chips has been exacerbated because Nvidia, a longtime provider of the chips, has a virtual lock on the market. Inundated with demand, the Silicon Valley company — which has surged to a $1 trillion valuation — is expected to report record financial results next week.

Tech companies typically buy access to A.I. chips and their compute power through cloud computing services from the likes of Google, Microsoft and Amazon. That way, they do not have to build and operate their own data centers full of computer servers connected with specialized networking gear.

But the A.I. explosion has meant that there are long wait lists — stretching to almost a year in some cases — to access these chips at cloud computing companies, creating an unusual roadblock at a time when the tech industry sees nothing but opportunity and boundless growth for businesses building generative A.I., which can create its own images, text and video.

The largest tech firms can generally get their hands on GPUs more easily because of their size, deep pockets and market positions. That has left start-ups and researchers, who typically lack the relationships or spending power, scrambling.

Their desperation is palpable. On social media, blog posts and conference panels, start-up founders and investors have started sharing highly technical tips for navigating the shortage. Some are gaming out how long they think it will take Nvidia’s wait-list to clear. There’s even a groan-worthy YouTube song, set to the tune of Billy Joel’s “We Didn’t Start the Fire,” in which an artist known as Weird A.I. Yankochip sings “GPUs are fire, we can never find ’em but we wanna buy ’em.”

Some venture capital firms, including Index Ventures, are now using their connections to buy chips and then offering them to their portfolio companies. Entrepreneurs are rallying start-ups and research groups together to buy and share a cluster of GPUs.

At Docugami, Mr. Paoli weighed the possibility of diverting GPU resources from research and development to his product, an A.I. service that analyzes documents. Two weeks ago, he struck gold: Docugami secured access to the computing power it needed through a government program called Access, which is run by the National Science Foundation, a federal agency that funds science and engineering. Docugami had previously won a grant from the agency, which qualified it to apply for access to the chips.

“That’s the life of a start-up when you need GPUs,” he said.

The lack of A.I. chips has been most acute for companies that are just starting out. In June, Eric Jonas left a job teaching computer science at the University of Chicago to raise money to start an A.I. drug discovery company. The scarce access to GPUs for university research projects had already been frustrating, but Mr. Jonas was shocked to discover it was just as hard for a start-up, he said.

“It’s the Wild West,” he said. “There is literally no capacity.”

Mr. Jonas said he considered a range of undesirable options, including using older, less powerful chips and setting up his own data center. He also toyed with using chips from a friend’s Bitcoin mining rig — a computer designed to do the calculations that produce the digital currency — but figured that would create more work since those chips were not programmed for the kind of work required by A.I.

For now, Mr. Jonas is calling in favors from friends at large equipment vendors, and from people at quantitative stock trading firms, who might have spare GPUs or test labs with GPUs he could use. He said he did not need much: just 64 GPUs for six hours at a time.

The strain is what recently prompted two founders, Evan Conrad and Alex Gajewski, to start the San Francisco Compute Group, a project that plans to let entrepreneurs and researchers buy access to GPUs in small amounts. After hundreds of emails and a dozen phone calls to cloud companies, equipment makers and brokers, they announced last month that they had secured 512 of Nvidia’s H100 chips and would rent them out to interested parties.

The announcement went “hilariously viral,” Mr. Conrad said, and resulted in hundreds of messages from founders, graduate students and other research organizations.

Mr. Conrad and Mr. Gajewski now plan to raise $25 million in a specialized kind of debt that uses the computer chips as collateral. Their vendor, whom the founders declined to name for fear that someone would swoop in and buy the GPUs out from under them, has promised access in around a month.

The duo said they hoped to help start-ups save money by buying only the computing power they need to experiment, rather than making large, yearslong commitments.

“Otherwise, the incumbents all win,” Mr. Conrad said.

Venture capital investors have a similar goal. This month, Index Ventures struck a partnership with Oracle to provide a mix of Nvidia’s H100 chips and an older version, called A100, to its very young portfolio companies at no cost.

Erin Price Wright, an Index Ventures investor, said the firm had seen its start-ups struggle to navigate the complicated process of getting computing power, only to land on wait lists as long as nine months. Two companies are set to use the firm’s new program, and others have expressed interest.

Before the shortage, George Sivulka, chief executive of Hebbia, an A.I. productivity software maker, simply asked his cloud provider for more “instances,” or virtual servers full of GPUs, as the company expanded. Now, he said, his contacts at the cloud companies either don’t respond to his requests or add him to a four-month wait list. He has resorted to using customers and other connections to help make his case to the cloud companies. And he’s constantly on the lookout for more.

“It’s almost like talking about drugs: ‘I know a guy who has H100s,’” he said.

Several months ago, some Hebbia engineers set up a server with some less-efficient GPUs in the company’s Manhattan office, parked the machine in a closet and used it to work on smaller projects. Liquid cooling units keep the server from overheating, Mr. Sivulka said, but it’s noisy.

“We shut the door,” he said. “No one sits next to it.”

The scarcity has created a stark contrast between the haves and have-nots. In June, Inflection AI, an A.I. start-up in Palo Alto, Calif., announced it had acquired 22,000 of Nvidia’s H100 chips. It also said it raised $1.3 billion from Microsoft, Nvidia and others. Mustafa Suleyman, Inflection’s chief executive, said in an interview that the company planned to spend at least 95 percent of the funds on GPUs.

“It’s a seismic amount of compute,” he said. “It’s just eye-watering.”

Other start-ups have asked him to share, he said, but the company is already at full capacity.